Mum, I want a nuclear power station

Outsourcing vs in-house development

Companies constantly have to choose between options to reduce costs and maximise benefits. They must decide whether to rent or buy offices, and whether or not to externalise certain business functions through outsourcing, offshoring and other strategies. In the end, these strategies let the company concentrate on its core competence without having to grow or shrink its workforce or property every time demand changes.

In the IT field, such decisions are normally related to software development: the typical ‘do we do it in-house or externally?’ question. It is much less common to apply similar strategies to other areas of IT.

In the area of IT infrastructure (where the company’s services run), the idea is often dismissed. Because the applications are critical, companies feel it is not a good idea to delegate their operation, or even a small part of it, to a third party for fear of losing ‘total control’. Nevertheless, and above all among internet companies, there is a growing perception that a company does not need to build its own infrastructure to run its applications. Some even decide that they don’t need their own infrastructure to develop their core business.

To translate the problem into a more familiar equivalent: when we flick a switch to turn on a light, we want illumination and we don’t really care where the electricity is produced, we simply want the thing to work. We don’t need a nuclear power station at home to light a bulb. If we have a company and electricity is key to our business, we will surely want to keep the supply running in case of failure, but in such cases it is more practical to have several failover providers than to become our own provider.
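The ‘multiple failover providers’ idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the provider functions and the `call_with_failover` helper are hypothetical names invented for this example, and real providers would be remote services rather than local functions.

```python
from typing import Callable, Sequence


class AllProvidersFailed(Exception):
    """Raised when every configured provider has failed."""

    def __init__(self, errors):
        super().__init__(f"{len(errors)} provider(s) failed")
        self.errors = errors


def call_with_failover(providers: Sequence[Callable[[str], str]], request: str) -> str:
    """Try each provider in order and return the first successful answer."""
    errors = []
    for provider in providers:
        try:
            return provider(request)
        except Exception as exc:  # a failed provider just means "try the next one"
            errors.append(exc)
    raise AllProvidersFailed(errors)


# Hypothetical providers: the primary is down, the secondary works.
def primary(request: str) -> str:
    raise ConnectionError("primary provider is down")


def secondary(request: str) -> str:
    return f"served by secondary: {request}"


result = call_with_failover([primary, secondary], "light the bulb")
print(result)
```

The calling code never needs to know which provider answered, just as we don’t care which power station lit the bulb.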

Utility computing: IT like water or electricity

For years this idea has been shaped by the leading computer companies, which want to turn IT into just another commodity for their client companies, like electricity, gas or water.

Certain components, such as computation and storage, are now being commoditised to a level mature enough for companies to use them in production environments. This matters because it allows economies of scale among suppliers, who can therefore offer a better service at a better price.

So, we could run our application on virtual servers that we pay for according to usage. Our backups, or the content we serve, could sit on remote storage servers, so we would avoid spending on backup and storage devices (the cost of a good backup system can be significant). We could serve certain content through content delivery networks, giving the impression of having a data centre near the client. Bit by bit, we could externalise certain infrastructure services to specialist providers, reducing costs and improving the quality of service.

We could go even further and adapt certain components of the application so that they are provided by specialist third-party companies. This way we would avoid their development and maintenance costs, which in certain cases can be an important part of an application’s budget. In short, we could optimise and externalise whatever is not adding value to our application.

Digital signature on demand

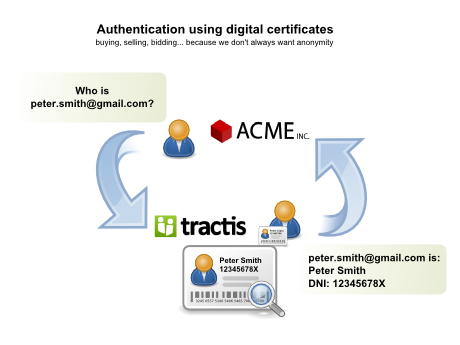

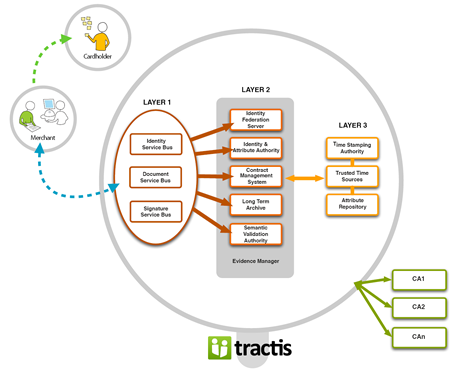

Among the components ripe for being provided by specialised third parties we find public key and digital signature infrastructure. It is more and more vital in applications, above all in the internet world, but it is complex and non-trivial to develop.

In this field, there is a tendency to embed existing libraries in the product or to install ready-made products, open source or commercial, from third parties. The problem is that the development, installation and maintenance of these types of solution are not very accessible.

They’re only within reach of companies that have huge budgets and, even in these cases, the companies often lack the know-how or don’t budget for maintenance and continuous improvement of the infrastructure. The result is deployments that don’t account for the total cost of ownership (TCO), quickly become obsolete, go live in a buggy state or aren’t deployed at all.

The field of public key infrastructure was the first of the two to make a move. Today certain activities, such as issuing certificates, are limited to a small group of specialist providers, given that they require a large amount of money and effort to put into production.

People are still waking up to the complexity of digital signature infrastructures. While very few consider setting up their own certification authority, it is common to attempt to develop a digital signature authority (be it for creation, validation, signing or storage).

A good idea is to apply the same criteria as for certification authorities: delegate the digital signature services to a third party and concentrate on developing our main business activity.

In fact, more and more public institutions and private companies in Spain are making use of third-party platforms that offer public key and digital signature infrastructure services. This is opening a new IT market and reinforcing the idea that such critical jobs should be centralised in specialised providers who can take advantage of economies of scale to optimise cost and maximise the quality of the end product.

We believe that this is an important idea. Public key infrastructure and digital signatures should be something that companies can integrate into their applications without the large sums of money or effort that have been needed until now. We realise how complicated it can be to solve these problems because we too have been there. For this reason we decided to open up our digital signature services and offer them as third-party services because, on the Internet, security should be a commodity, not a luxury.

By David García

Saved in: e-Signatures, Technology, Tractis | 19 March 2008